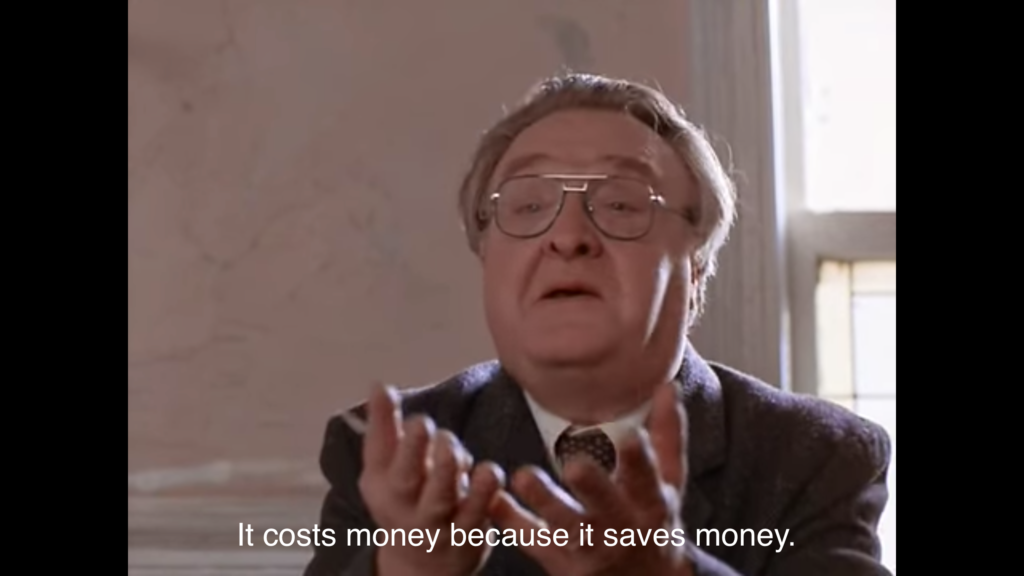

In the 1987 movie Moonstruck, Vincent Gardenia explains why expensive copper pipes are the best solution to a couple’s plumbing problem. He admits, “copper costs money” but concludes his speech with a pearl of wisdom: “It costs money because it saves money.”

This same concept applies to important technologies regularly used in software development: programming languages that need compiling versus those that are interpreted.

The software development lifecycle

When done properly, making software is a cycle of repeated steps. The cycle works like this:

- developers write code they hope will run correctly

- before the code can run, it is fed into a compiler, which translates it into an executable form (the proverbial ones and zeros)

- the compiled program is run and tested

- if there are bugs, the developer writes more code and starts the process again

Popular and powerful programming languages like Objective-C and Java require a compiler, and compiling was (and is) a very finicky business. The compiler checks every data type and data structure in your program before it produces an executable you can run. If it finds anything unexpected or inconsistent, it reports an error and compiling stops. Some compiler errors are quite arcane, and it can take a lot of time to track down the problem.

For example, if your program declares an array to hold up to ten numbers, the compiler will make sure your code stores only numbers in that array. If it finds any code trying to store text in the array, it will complain about a mismatched data type. If it can tell that some code is trying to work with the fifteenth item in the array, it may complain that the array can only store ten things, although some compilers (Java’s among them) leave that particular check until the program runs. When the compiler is picking these nits (called compile-time errors), it can drag out the software development lifecycle.

Over the years, programmers looked for ways to simplify and shorten these steps. The step that proved the easiest to eliminate was compiling. Without needing to compile source code into a binary executable, a programmer could go immediately from programming to testing and quickly back to programming again.

Interpreted languages provided the solution. Instead of compiling a unique binary from the program’s source code, interpreted languages use a special program called an interpreter. The source code is fed to the interpreter, which has already been compiled. The interpreter converts the source code into a runnable form internally and then executes it. Because the interpreter is compiled only once and can then run any code it’s given, programmers noticed drastic increases in their productivity.
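As a rough illustration of that "convert, then execute" step, here is a minimal Python sketch. It relies on Python's built-in `compile()` and `exec()` functions; the names `run`, `good_source`, and `bad_source` are made up for this example, and other interpreters may handle the details quite differently.

```python
# Hypothetical source text, as it might sit in a file on disk.
good_source = "total = sum(prices)\n"
bad_source = 'rate = "inventory"\ntotal = 100.0 * rate\n'


def run(source, variables):
    """Translate source text into a runnable form, then execute it."""
    # Step 1: the interpreter converts the text into an internal code object.
    # Only the syntax is checked here, not the types of the values involved.
    code_object = compile(source, "<example>", "exec")
    # Step 2: the interpreter executes that code object.
    exec(code_object, variables)
    return variables


good = run(good_source, {"prices": [1, 2, 3]})
print(good["total"])

try:
    run(bad_source, {})
    outcome = "ran without error"
except TypeError as err:
    # The conversion step accepted the code; the mistake only shows up
    # once the offending line actually runs.
    outcome = f"failed while running: {err}"
print(outcome)
```

Note that the second snippet passes the conversion step without complaint, even though it multiplies a number by a word.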

Or did they?

There is no free lunch

Programming in an interpreted language doesn’t mean your code is now bug-free. For example, let’s say we use the same ten-item array mentioned above, but now we’re writing in an interpreted language like Ruby or Python. So we can write code, then run it immediately – no compiling needed. This is what we wanted!

But this time, because there is no compiler to stop us, our program is able to store text in our array (which was meant to store only numbers). So when the program runs, we get an incorrect total price, because sales tax was calculated using the word “inventory” instead of the correct number, 0.05. Instead of a compile-time bug, we now have a runtime bug. So we have to track down where that text is being mistakenly inserted into the array and fix it. Maybe we also want to make sure that any item retrieved from the array is in fact a number before the sales tax is figured out, so we add some code to check that (sometimes called sanity-checking).
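A sanity check of that kind might look something like the following Python sketch. The function names, the list of rates, and the price of 100.0 are all assumptions made for illustration. In Python, this particular mistake happens to raise a `TypeError` when the line runs rather than quietly producing a wrong total, but either way the problem is only discovered at runtime.

```python
def total_with_tax(price, rate):
    """Return price plus sales tax, without checking what `rate` is."""
    return price + price * rate


def checked_total_with_tax(price, rate):
    """Same calculation, but sanity-check the rate first."""
    # bool is technically an int in Python, so reject it explicitly.
    if isinstance(rate, bool) or not isinstance(rate, (int, float)):
        raise TypeError(f"tax rate must be a number, got {rate!r}")
    return price + price * rate


# Hypothetical data: the list was meant to hold only numeric tax rates,
# but a string has slipped in. Nothing stops this from loading and running.
tax_rates = [0.05, "inventory"]

results = []
for rate in tax_rates:
    try:
        results.append(checked_total_with_tax(100.0, rate))
    except TypeError as err:
        # The mistake only surfaces here, while the program is running.
        results.append(f"skipped: {err}")

print(results)
```

The check costs a few extra lines, but its error message names the bad value directly, which makes it easier to trace back to wherever the text was inserted.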

We chose an interpreted language to improve our software development process. So have we saved time? Have we made things simpler? The answer is, it depends. True, we were able to skip the time-consuming compiling step, but we had to spend time fixing the same code the compiler would have warned us about. The compiler does slow things down, but perhaps it saves us time later. Or maybe, overall, fixing these runtime bugs takes less time than sitting through long compiles several times a day. Better yet, there may be a way to optimize our compile step so it’s faster or less onerous for the development team.

It costs time because it saves time

In the real world, the decision about what programming language to use for a project is based on a variety of factors more important than how long a compile takes. The scenario above is a somewhat tortured way to make a point: writing quality code may take time, but it can save time later. That means that not all languages are created equal, not only when it comes to their capabilities, but also in what type of work (and therefore what type of team) is needed for that language to be used effectively.