A significant portion of today’s mobile devices run on top of Android OS. One of the reasons for the widespread adoption of Android is its open source nature. While this allows any programmer to look beyond the basic API (referred to as the Application Framework), understanding and modifying the lower layers of the Android stack is difficult. This becomes apparent as soon as you download all 12 GB of Android’s source code. In addition, while the Application Framework’s Java code is accompanied by detailed comments, the remainder of the code base is sparsely documented, if at all. To make things worse, the undocumented part of the code base is also the more complex part: it uses intricate IPC mechanisms and switches between programming languages.

In this article, I present the architecture of the media playback infrastructure (with Stagefright as the underlying media player). My goal is to help you, an interested reader, get a grasp of how things work behind the curtains, and to help you more easily identify the part of the code base you may want to tweak or optimize. Some useful online resources already outline certain bits and pieces of the media player architecture [1] [2], but the slideshow format of these descriptions omits a number of important details.

Overall Structure

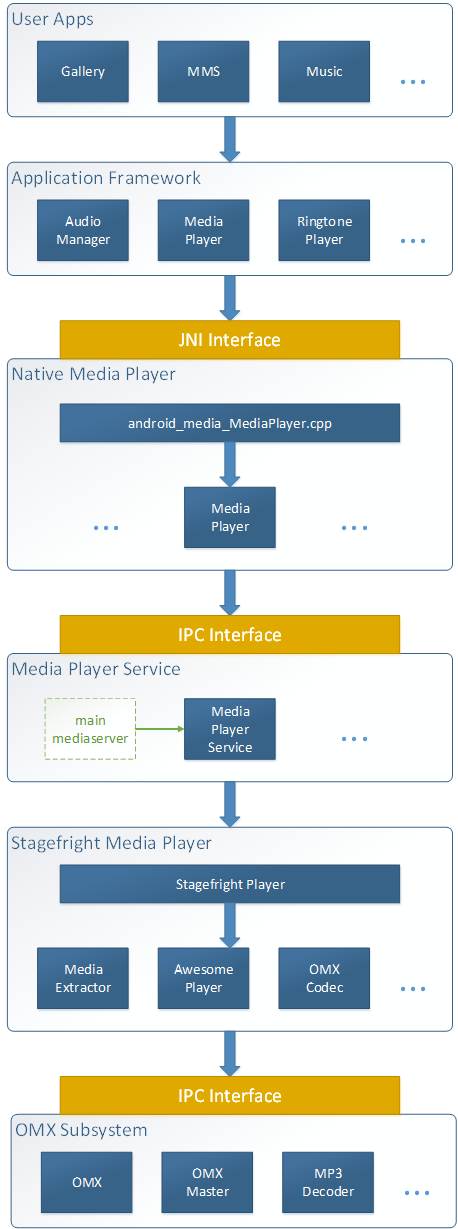

The architecture of the media player infrastructure, from the end-user apps to the media codecs that perform the algorithmic magic, is layered with many levels of indirection. The diagram above depicts the high-level architecture described below.

At the topmost layer of the architecture are the user apps that leverage media playback functionality, such as playing audio streams, ringtones, or video clips. These apps use the components from the Application Framework that implement the high-level interfaces for accessing and manipulating media content. Below this layer, things get more complicated as the Application Framework components communicate with native C++ components through JNI interfaces. These native components are essentially thin clients that forward media requests from Java code to the underlying Media Player Service via IPC calls. The Media Player Service selects the appropriate media player (in our case Stagefright), which then instantiates an appropriate media decoder, fetches and manipulates the media files, manages buffers, and so on. While even this high-level architecture is relatively complex, the devil is still in the details, so I will now guide you through the different subsystems.

User Apps and Application Framework

Android end-user apps, stored in the packages/apps folder, use the Application Framework’s Java classes such as AudioManager, MediaPlayer, and RingtonePlayer. These classes are stored in the frameworks/base/media/java folder, and provide intuitive interfaces for manipulating different types of media. Most of them serve only as thin wrappers around a set of underlying native functions. For example, MediaPlayer methods perform simple native invocations (e.g., the Java MediaPlayer.start() just invokes the native start() method written in C++). By contrast, AudioManager performs several additional functions (monitoring the volume keys and the vibration settings). All of AudioManager’s audio-playing requests, however, are forwarded to AudioService, which adds very little functionality of its own and either invokes native methods directly or goes through the Java MediaPlayer. In a nutshell, the requests from the user apps end up being mapped to a very similar set of native functions. For example, changing the master volume, playing a ringtone, or listening to a radio stream all involve an invocation of the native libmedia/MediaPlayer::start() command.

Native Media Player Subsystem

Once a playback request goes through the JNI interface, the control flow and dataflow of the request become more difficult to track inside the source code due to a lack of documentation and the intricate invocation mechanisms. The JNI interface connects the Application Framework with C++ implementations of some methods located in the folder frameworks/base/media/jni. The most frequently invoked native methods are located in the android_media_MediaPlayer.cpp file, which instantiates a C++ MediaPlayer object for each new media file and routes requests from the Java MediaPlayer objects to their C++ counterparts. The implementations of these C++ classes are located under frameworks/av/media, while their interface definitions can be found in frameworks/av/include/media. If you expect these classes to implement the actual media playback functionality, you will be disappointed to hear that they only add yet another level of indirection, serving as thin IPC clients to the underlying lower-level media services. For example, MediaPlayer::start() does nothing more than route the invocation to the IMediaPlayer interface, which then performs an IPC invocation transact(START, data, &reply) to start the playback.
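To make the "thin IPC client" idea concrete, here is a minimal sketch of the forwarding pattern in plain C++. It does not use Binder: FakeRemote, PlayerProxy, ThinMediaPlayer, the Parcel alias, and the PAUSE and STOP codes are all hypothetical names invented for illustration, and only the shape of the transact(START, data, &reply) call is taken from the description above.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for a Binder parcel: just a list of strings.
using Parcel = std::vector<std::string>;

// Transaction codes. The article only mentions START; the others are illustrative.
enum TransactionCode : std::uint32_t { START = 1, PAUSE = 2, STOP = 3 };

// Stand-in for the remote end of the IPC channel (the Media Player Service).
struct FakeRemote {
    std::vector<std::uint32_t> received;  // transaction codes the "service" has seen

    int transact(std::uint32_t code, const Parcel& data, Parcel* reply) {
        (void)data;                  // a real service would unmarshal arguments here
        received.push_back(code);
        reply->push_back("OK");
        return 0;                    // 0 means success in this sketch
    }
};

// Plays the role of the IMediaPlayer proxy: each method becomes one transaction.
class PlayerProxy {
public:
    explicit PlayerProxy(FakeRemote* remote) : remote_(remote) {}
    int start() { return send(START); }
    int pause() { return send(PAUSE); }
    int stop()  { return send(STOP); }

private:
    int send(std::uint32_t code) {
        Parcel data, reply;
        data.push_back("interface-token");  // placeholder for the marshalled header
        return remote_->transact(code, data, &reply);
    }
    FakeRemote* remote_;
};

// Plays the role of the native MediaPlayer: no playback logic, only forwarding.
class ThinMediaPlayer {
public:
    explicit ThinMediaPlayer(PlayerProxy proxy) : proxy_(proxy) {}
    int start() { return proxy_.start(); }
    int pause() { return proxy_.pause(); }
    int stop()  { return proxy_.stop(); }

private:
    PlayerProxy proxy_;
};
```

Calling start() on a ThinMediaPlayer built from a PlayerProxy does nothing locally; it only appends START to the remote's received list, which is the whole point of this layer.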

Media Player Service Subsystem

The IPC requests from the Native Media Player Subsystem are in turn handled by the Media Player Service subsystem (the subsystem mostly comprises C++ classes in the frameworks/av/media/libmediaplayerservice folder). This subsystem is initialized in the main() method of frameworks/av/media/mediaserver/main_mediaserver.cpp, which includes startup of multiple Android servers such as AudioFlinger, MediaPlayerService (relevant for our discussions), and CameraService. The instantiation of the Media Player Service subsystem involves creation of a MediaPlayerService object, as well as instantiation and registration of factories for the built-in media player implementations (NuPlayer, Stagefright, Sonivox). Once up and running, MediaPlayerService will accept IPC requests from the Native Media Player subsystem and instantiate a new MediaPlayerService::Client for each request that manipulates media content. To play the media, Client has a method createPlayer that creates a low-level media player of a specified type (in our case StagefrightPlayer) using the appropriate factory. The subsequent media playback requests (e.g., pausing, resuming, and stopping) are then directly forwarded to the new instance of StagefrightPlayer.
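The factory registration and the createPlayer step can be sketched as follows. This is a simplified model under stated assumptions, not the service's actual code: PlayerBase, NamedPlayer, PlayerFactoryRegistry, makeBuiltinRegistry, and the UNKNOWN_PLAYER value are hypothetical, and the enum values are illustrative rather than the ones Android defines.

```cpp
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Illustrative player types; the values are not Android's.
enum PlayerType { STAGEFRIGHT_PLAYER, NU_PLAYER, SONIVOX_PLAYER, UNKNOWN_PLAYER };

// Hypothetical common interface for all low-level players.
class PlayerBase {
public:
    virtual ~PlayerBase() = default;
    virtual std::string name() const = 0;
    virtual void start() = 0;
    virtual bool isPlaying() const = 0;
};

// One trivial player class stands in for every built-in implementation.
class NamedPlayer : public PlayerBase {
public:
    explicit NamedPlayer(std::string name) : name_(std::move(name)) {}
    std::string name() const override { return name_; }
    void start() override { playing_ = true; }
    bool isPlaying() const override { return playing_; }

private:
    std::string name_;
    bool playing_ = false;
};

// Maps a player type to the factory that builds it.
class PlayerFactoryRegistry {
public:
    using Factory = std::function<std::unique_ptr<PlayerBase>()>;

    void registerFactory(PlayerType type, Factory factory) {
        factories_[type] = std::move(factory);
    }

    // Returns nullptr when no factory is registered for the type.
    std::unique_ptr<PlayerBase> create(PlayerType type) const {
        auto it = factories_.find(type);
        return it == factories_.end() ? nullptr : it->second();
    }

private:
    std::map<PlayerType, Factory> factories_;
};

// Registers the three built-in players, as done once at service startup.
PlayerFactoryRegistry makeBuiltinRegistry() {
    PlayerFactoryRegistry registry;
    registry.registerFactory(STAGEFRIGHT_PLAYER, [] {
        return std::unique_ptr<PlayerBase>(new NamedPlayer("stagefright"));
    });
    registry.registerFactory(NU_PLAYER, [] {
        return std::unique_ptr<PlayerBase>(new NamedPlayer("nuplayer"));
    });
    registry.registerFactory(SONIVOX_PLAYER, [] {
        return std::unique_ptr<PlayerBase>(new NamedPlayer("sonivox"));
    });
    return registry;
}

// Per-request client: creates one player, then forwards playback calls to it.
class Client {
public:
    explicit Client(const PlayerFactoryRegistry& registry) : registry_(registry) {}

    bool createPlayer(PlayerType type) {
        player_ = registry_.create(type);
        return player_ != nullptr;
    }

    bool start() {
        if (!player_) return false;
        player_->start();
        return true;
    }

    const PlayerBase* player() const { return player_.get(); }

private:
    const PlayerFactoryRegistry& registry_;
    std::unique_ptr<PlayerBase> player_;
};
```

The registry keeps the Client unaware of concrete player classes, which is what lets the service swap Stagefright for another implementation without touching the request-handling code.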

Stagefright Media Player

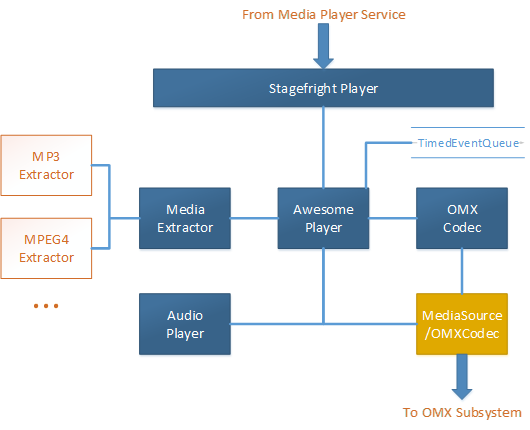

The StagefrightPlayer class is a thin client to the actual media player named AwesomePlayer. The Stagefright Media Player subsystem, located in the folder frameworks/av/media/libstagefright, implements the algorithmic logic (unsurprisingly, many of the files run to thousands of SLOC). The detailed architecture of this subsystem is depicted in the figure above.

AwesomePlayer implements the executive functionality, which includes connecting video, audio, and video caption sources with the appropriate decoders, playing the media, and synchronizing video with the audio and captions. At initialization, AwesomePlayer’s data source is set up (setDataSource command), which internally requires communication with the MediaExtractor component. MediaExtractor invokes the appropriate data parser according to the media type (e.g., frameworks/av/media/libstagefright/MP3Extractor.cpp for MPEG/MP3 audio). The memory reference to the parsed data that is returned in this manner is then used for media playback.
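One way to picture the parser selection is a table of "sniffers" that inspect the first bytes of the data and report how confident they are that they recognize the format. The sketch below is a simplified model of that idea, not MediaExtractor's code: the function names, the confidence values, and the use of a std::string as the header buffer are assumptions. The magic bytes themselves (an "ID3" tag at the start of many MP3 files, "ftyp" at offset 4 in MP4 files, "OggS" at the start of Ogg files) are standard container markers.

```cpp
#include <string>

// Each sniffer returns a confidence in [0, 1]; 0 means "not my format".
// The confidence values are made up for this sketch.
float sniffMp3(const std::string& header) {
    return header.compare(0, 3, "ID3") == 0 ? 0.8f : 0.0f;
}

float sniffMpeg4(const std::string& header) {
    // The size check keeps compare() from being called with an out-of-range offset.
    return (header.size() >= 8 && header.compare(4, 4, "ftyp") == 0) ? 0.9f : 0.0f;
}

float sniffOgg(const std::string& header) {
    return header.compare(0, 4, "OggS") == 0 ? 0.9f : 0.0f;
}

struct ExtractorEntry {
    const char* name;                       // parser that would handle the data
    float (*sniff)(const std::string&);     // format detector for that parser
};

// Returns the name of the best-matching parser, or an empty string if none match.
std::string selectExtractor(const std::string& header) {
    static const ExtractorEntry kEntries[] = {
        {"MP3Extractor", sniffMp3},
        {"MPEG4Extractor", sniffMpeg4},
        {"OggExtractor", sniffOgg},
    };

    std::string best;
    float bestConfidence = 0.0f;
    for (const ExtractorEntry& entry : kEntries) {
        float confidence = entry.sniff(header);
        if (confidence > bestConfidence) {
            bestConfidence = confidence;
            best = entry.name;
        }
    }
    return best;
}
```

Ranking by confidence rather than returning the first match leaves room for formats whose markers overlap, at the cost of running every sniffer on every file.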

To prepare for playback, AwesomePlayer leverages the OMXCodec component (implemented by the static methods of frameworks/av/media/libstagefright/OMXCodec.cpp) to set up the decoders to use for each data source (audio, video, and captions naturally use separate codecs). The decoder functionality resides in the OMX subsystem (Android’s implementation of OpenMAX, the API for media library portability), which handles memory buffers, translation into raw format, and similar low-level operations. The subsystem, implemented primarily with the classes located in the frameworks/av/media/libstagefright/omx and frameworks/av/media/libstagefright/codecs folders, is complex on its own and will not be covered in this article. Stagefright Media Player components communicate with OMX via IPC invocations. The implicit client for these invocations is the MediaSource/OMXCodec object created by the OMXCodec component and returned to AwesomePlayer.
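The key structural point is that the decoder handed back to the player looks like just another media source: the player pulls buffers from it, and it in turn pulls compressed data from the track it wraps. The sketch below models only that wrapping, under loud assumptions: TrackSource, FakeDecoder, and createDecoder are hypothetical, the "decoding" is merely uppercasing text, the list of accepted MIME types is arbitrary, and no OMX or IPC is involved.

```cpp
#include <cctype>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical pull-style source: read() fills one buffer, false means end of stream.
class MediaSource {
public:
    virtual ~MediaSource() = default;
    virtual bool read(std::string* buffer) = 0;
};

// Stands in for a parsed track that yields compressed packets.
class TrackSource : public MediaSource {
public:
    explicit TrackSource(std::vector<std::string> packets)
        : packets_(std::move(packets)) {}

    bool read(std::string* buffer) override {
        if (next_ >= packets_.size()) return false;
        *buffer = packets_[next_++];
        return true;
    }

private:
    std::vector<std::string> packets_;
    std::size_t next_ = 0;
};

// Stands in for the decoder object: same interface, wraps an upstream source.
class FakeDecoder : public MediaSource {
public:
    explicit FakeDecoder(std::unique_ptr<MediaSource> upstream)
        : upstream_(std::move(upstream)) {}

    bool read(std::string* buffer) override {
        std::string packet;
        if (!upstream_->read(&packet)) return false;
        // Fake "decode" step: uppercase the packet. A real codec does the heavy lifting here.
        for (char& c : packet) {
            c = static_cast<char>(std::toupper(static_cast<unsigned char>(c)));
        }
        *buffer = packet;
        return true;
    }

private:
    std::unique_ptr<MediaSource> upstream_;
};

// Static-style factory: returns a decoder for known types, nullptr otherwise.
std::unique_ptr<MediaSource> createDecoder(const std::string& mime,
                                           std::unique_ptr<MediaSource> upstream) {
    if (mime != "audio/mpeg" && mime != "video/avc") return nullptr;
    return std::unique_ptr<MediaSource>(new FakeDecoder(std::move(upstream)));
}
```

Because the decoder and the raw track share one interface, the player code that consumes buffers does not need to know how many stages sit behind the object it reads from.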

Finally, AwesomePlayer handles playing, pausing, stopping, and restarting media playback, and it does so differently depending on the type of media. For audio, AwesomePlayer instantiates and invokes an AudioPlayer component that is used as a wrapper for any audio content. For example, when only audio is played, AwesomePlayer simply invokes AudioPlayer::start() and remains idle until the audio track finishes or the user submits a new command. During the playback, AudioPlayer uses the MediaSource/OMXCodec object to communicate with the underlying OMX subsystem.

For video, AwesomePlayer invokes AwesomeRenderer’s video rendering capabilities, while also directly communicating with the OMX subsystem through the MediaSource/OMXCodec object (there is no proxy such as AudioPlayer in the case of video playback). In addition, AwesomePlayer is in charge of audio and video synchronization. For this reason, AwesomePlayer employs a timed queuing mechanism (TimedEventQueue) that continuously schedules rendering of buffered video segments. When a queued timed event’s deadline is reached, TimedEventQueue invokes AwesomePlayer’s callback functions that perform bookkeeping and make sure that everything is running properly and that audio and video are in sync (AudioPlayer is invoked to check the state and timing of audio playback). This functionality is implemented in the AwesomePlayer::onVideoEvent() method, which, following the processing and synchronization, invokes AwesomePlayer::postVideoEvent_l() to schedule the next video segment. Similar functions are implemented in other callback functions such as onBufferingUpdate, onCheckAudioStatus, onPrepareAsyncEvent, and onStreamDone, which are invoked by TimedEventQueue when processing media playback.
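The self-rescheduling event loop is easier to follow in miniature. The sketch below replaces the real thread and wall clock with a simulated clock so it can run deterministically. SimTimedEventQueue, SimVideoPlayer, the audioLeadUs parameter, and the 40 ms lateness threshold are assumptions made for illustration; only the pattern (a video event callback that checks the audio clock, renders or drops a frame, and posts the next event) follows the description above.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Simulated timed queue: events fire in timestamp order and advance a fake clock.
class SimTimedEventQueue {
public:
    using Callback = std::function<void()>;

    void postAt(std::int64_t timeUs, Callback cb) {
        events_.push(Event{timeUs, seq_++, std::move(cb)});
    }

    std::int64_t nowUs() const { return nowUs_; }

    void runUntilEmpty() {
        while (!events_.empty()) {
            Event e = events_.top();  // copy, because callbacks may post new events
            events_.pop();
            if (e.timeUs > nowUs_) nowUs_ = e.timeUs;
            e.cb();
        }
    }

private:
    struct Event {
        std::int64_t timeUs;
        std::uint64_t seq;  // keeps insertion order for equal timestamps
        Callback cb;
    };
    struct Later {
        bool operator()(const Event& a, const Event& b) const {
            return a.timeUs != b.timeUs ? a.timeUs > b.timeUs : a.seq > b.seq;
        }
    };
    std::priority_queue<Event, std::vector<Event>, Later> events_;
    std::int64_t nowUs_ = 0;
    std::uint64_t seq_ = 0;
};

// Minimal player: one video event per frame, each event schedules the next one.
class SimVideoPlayer {
public:
    // audioLeadUs models how far the audio clock runs ahead of the simulated time.
    SimVideoPlayer(SimTimedEventQueue* queue, std::vector<std::int64_t> frameTimesUs,
                   std::int64_t audioLeadUs)
        : queue_(queue), frames_(std::move(frameTimesUs)), audioLeadUs_(audioLeadUs) {}

    void play() {
        if (!frames_.empty()) postVideoEvent(frames_[0]);
    }

    const std::vector<std::int64_t>& rendered() const { return rendered_; }
    int dropped() const { return dropped_; }

private:
    static constexpr std::int64_t kLateThresholdUs = 40000;  // assumed value for this sketch

    void postVideoEvent(std::int64_t atUs) {
        queue_->postAt(atUs, [this] { onVideoEvent(); });
    }

    void onVideoEvent() {
        if (next_ >= frames_.size()) return;  // stream done
        std::int64_t frameUs = frames_[next_++];
        std::int64_t audioUs = queue_->nowUs() + audioLeadUs_;  // "ask the audio player"
        if (audioUs - frameUs > kLateThresholdUs) {
            ++dropped_;                    // too late to show, skip to stay in sync
        } else {
            rendered_.push_back(frameUs);  // on time, hand the frame to the renderer
        }
        if (next_ < frames_.size()) postVideoEvent(frames_[next_]);
    }

    SimTimedEventQueue* queue_;
    std::vector<std::int64_t> frames_;
    std::int64_t audioLeadUs_;
    std::size_t next_ = 0;
    std::vector<std::int64_t> rendered_;
    int dropped_ = 0;
};
```

With three frames at 0, 33000, and 66000 microseconds and no audio lead, all three frames are rendered; with an audio lead of 100000 microseconds, every frame exceeds the threshold and is dropped.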

While this article qualifies as a fairly lengthy blog post, I have only scratched the surface of the complex media playback functionality. If you find something unclear or would like to discuss the architecture further, please do not hesitate to comment or contact us directly. I would also like to invite you to read a separate article that uses the media player as an example to present a set of tips and tricks for manually recovering a software architecture.